Production Model Lifecycle Management

What Is Production Model Lifecycle Management?

Machine learning models have a complex lifecycle that includes frequent updates in production environments. The initial deployment of a model is only the beginning of a long and sometimes complicated process. The lifecycle includes the following steps:

Model retraining – When production model monitoring identifies issues with model performance, the model is retrained on newer data. The model algorithm and tuning parameters are not changed. Once retrained, the new model version goes through testing before it is promoted to production.
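A minimal Python sketch of this step, assuming a hypothetical `ModelVersion` record and a simple accuracy-drop rule standing in for the monitoring signal. Retraining keeps the algorithm and tuning parameters unchanged, points at a newer data snapshot, and marks the result as a candidate that still has to pass testing. The threshold, field names, and snapshot identifiers are illustrative assumptions, not part of any product API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    """Hypothetical record of one trained model version."""
    version: int
    algorithm: str
    params: dict
    trained_on: str            # identifier of the training-data snapshot
    status: str = "candidate"  # a new version must pass testing before production


def needs_retraining(recent_accuracy: float, baseline_accuracy: float,
                     tolerance: float = 0.05) -> bool:
    """Assumed monitoring rule: retrain when accuracy drops more than `tolerance` below baseline."""
    return recent_accuracy < baseline_accuracy - tolerance


def retrain(current: ModelVersion, new_data_id: str) -> ModelVersion:
    """Produce the next version on newer data; algorithm and tuning parameters are unchanged."""
    # A real pipeline would fit the model here; this sketch only records the new version.
    return ModelVersion(
        version=current.version + 1,
        algorithm=current.algorithm,
        params=dict(current.params),  # copy so the two versions never share state
        trained_on=new_data_id,
    )


production = ModelVersion(1, "gradient_boosting", {"max_depth": 6},
                          "snapshot-v1", status="production")
candidate = None
if needs_retraining(recent_accuracy=0.81, baseline_accuracy=0.90):
    candidate = retrain(production, "snapshot-v2")
```

The retrained version is deliberately created with `status="candidate"` so that nothing in this step can put it into production directly.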

Model testing – A production model must maintain trust and meet rigorous criteria for performance against machine learning objectives. Each iteration of a model must run through model testing and validation steps independent of the training process. This is necessary to ensure that the new version maintains accuracy and is consistent with prior model versions.
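One way to express this gate is sketched below in Python. It assumes a labeled holdout set kept separate from training data and three illustrative thresholds: an absolute accuracy floor, a maximum allowed regression against the prior version, and a minimum agreement rate with the prior version as a stand-in for "consistent with prior model versions". The function names and toy threshold classifiers are hypothetical.

```python
from typing import Callable, Sequence

Predictor = Callable[[float], int]


def accuracy(model: Predictor, xs: Sequence[float], ys: Sequence[int]) -> float:
    """Fraction of holdout examples the model labels correctly."""
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(ys)


def agreement(a: Predictor, b: Predictor, xs: Sequence[float]) -> float:
    """Fraction of inputs on which two model versions give the same prediction."""
    return sum(a(x) == b(x) for x in xs) / len(xs)


def passes_validation(candidate: Predictor, previous: Predictor,
                      xs: Sequence[float], ys: Sequence[int],
                      min_accuracy: float = 0.8,
                      max_regression: float = 0.02,
                      min_agreement: float = 0.9) -> bool:
    """Gate a candidate on a holdout set that was not used for training.

    All three thresholds are illustrative assumptions.
    """
    cand_acc = accuracy(candidate, xs, ys)
    prev_acc = accuracy(previous, xs, ys)
    return (
        cand_acc >= min_accuracy                                 # absolute accuracy floor
        and cand_acc >= prev_acc - max_regression                # no meaningful regression
        and agreement(candidate, previous, xs) >= min_agreement  # consistent with prior version
    )


# Toy threshold classifiers and a tiny holdout set, for illustration only.
def previous_model(x: float) -> int:
    return int(x > 0.5)


def candidate_model(x: float) -> int:
    return int(x > 0.45)


holdout_x = [0.1, 0.2, 0.3, 0.4, 0.48, 0.6, 0.7, 0.8, 0.9, 0.95]
holdout_y = [0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
```

Here the candidate improves on the previous version while still agreeing with it on most inputs, so it passes; a model that always predicts `1` would fail the accuracy floor.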

Model warm-up – A production model must meet the performance criteria for production environments. Placing a model in warm-up mode on production infrastructure lets the MLOps team observe it under near real-world conditions and confirm that the model code performs as expected. In warm-up mode, the model receives live requests and generates responses, but those responses are not returned to callers. Logging response time and, where possible, accuracy allows comparison against the production model's performance and SLAs.
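The warm-up pattern can be sketched as follows, assuming models are plain Python callables and the log is an in-memory list; `serve_with_warmup`, `timed_call`, and `share_within_sla` are hypothetical names. Every request is answered by the production model, the warm-up model scores the same request, and only its prediction, error, and latency are recorded. For brevity the warm-up call runs synchronously; a real service would move it off the request path so it adds no latency.

```python
import time
from typing import Any, Callable, Dict, List, Optional, Tuple

Model = Callable[[Any], Any]


def timed_call(model: Model, request: Any) -> Tuple[Any, Optional[str], float]:
    """Run a model and return (prediction, error, latency in milliseconds)."""
    start = time.perf_counter()
    try:
        prediction, error = model(request), None
    except Exception as exc:  # a warm-up failure must never affect the live response
        prediction, error = None, repr(exc)
    return prediction, error, (time.perf_counter() - start) * 1000.0


def serve_with_warmup(request: Any, production: Model, warmup: Model,
                      log: List[Dict[str, Any]]) -> Any:
    """Answer with the production model; score the warm-up model and only log it."""
    start = time.perf_counter()
    response = production(request)  # production errors propagate as usual
    production_ms = (time.perf_counter() - start) * 1000.0

    warmup_prediction, warmup_error, warmup_ms = timed_call(warmup, request)
    log.append({
        "production_prediction": response,
        "production_ms": production_ms,
        "warmup_prediction": warmup_prediction,
        "warmup_error": warmup_error,
        "warmup_ms": warmup_ms,
    })
    return response  # callers only ever see the production model's answer


def share_within_sla(log: List[Dict[str, Any]], sla_ms: float) -> float:
    """Fraction of logged warm-up calls that succeeded within the latency SLA."""
    ok = [r for r in log if r["warmup_error"] is None and r["warmup_ms"] <= sla_ms]
    return len(ok) / len(log) if log else 0.0
```

Comparing `warmup_prediction` with `production_prediction`, and later with ground truth when it arrives, gives the accuracy comparison; `share_within_sla` gives a simple latency check against an SLA.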

Model challenger testing – When the data science team introduces a new, potentially better model, it must be tested in the production environment to determine whether it outperforms the existing model. A champion/challenger or A/B testing framework routes a percentage of requests to the challenger model so that its results can be compared with those of the current champion.
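The traffic-allocation part of such a framework can be sketched in Python as below. It assumes each request carries a string ID; hashing that ID (here with SHA-256 from the standard library) sends a stable share of requests to the challenger, and the same request ID always reaches the same model. The `route` function and the 10% share are illustrative; comparing the two arms' outcomes would happen downstream of this step.

```python
import hashlib


def route(request_id: str, challenger_share: float) -> str:
    """Deterministically send about `challenger_share` of requests to the challenger.

    Hashing the request ID keeps routing stable: the same ID always hits the same model.
    """
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # 48 bits divide exactly into a float, so the bucket is strictly below 1.0.
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return "challenger" if bucket < challenger_share else "champion"


# Route 10,000 synthetic request IDs with a 10% challenger allocation.
arms = [route(f"req-{i}", 0.10) for i in range(10_000)]
challenger_fraction = arms.count("challenger") / len(arms)
```

Deterministic, hash-based assignment (rather than a random draw per request) makes results reproducible and keeps a given user or entity on one model for the duration of the test.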

Blue-green model deployment – The deployment of a new model version must not disrupt requests from downstream business applications. In a blue-green setup, the new version is deployed alongside the current one, and internal routing then switches traffic to it in a single step, without interfering with outside processes or operations.
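A minimal in-process sketch of blue-green switching, assuming models are Python callables and downstream applications only ever call a hypothetical `BlueGreenRouter.predict`. A new version is staged in the idle slot while live traffic continues, and `switch()` flips all traffic at once under a lock; the previous version stays loaded in the other slot, so switching back is equally fast. Production systems usually do this at the load-balancer or service-mesh level rather than in application code.

```python
import threading
from typing import Any, Callable, Dict, Optional

Model = Callable[[Any], Any]


class BlueGreenRouter:
    """Hypothetical router with two slots; downstream apps only ever call predict()."""

    def __init__(self, initial_model: Model):
        self._slots: Dict[str, Optional[Model]] = {"blue": initial_model, "green": None}
        self._live = "blue"
        self._lock = threading.Lock()

    @property
    def live(self) -> str:
        return self._live

    def _idle(self) -> str:
        return "green" if self._live == "blue" else "blue"

    def predict(self, request: Any) -> Any:
        with self._lock:
            model = self._slots[self._live]  # read the live slot atomically
        return model(request)

    def deploy_to_idle(self, model: Model) -> str:
        """Stage a new version in the idle slot; live traffic is untouched."""
        with self._lock:
            idle = self._idle()
            self._slots[idle] = model
        return idle

    def switch(self) -> None:
        """Flip all traffic to the staged version in one step; the old one stays for fast switch-back."""
        with self._lock:
            idle = self._idle()
            if self._slots[idle] is None:
                raise RuntimeError("nothing deployed to the idle slot")
            self._live = idle
```

Usage: create `BlueGreenRouter(v1)`, call `deploy_to_idle(v2)` while `v1` keeps serving, then `switch()`; callers of `predict` never see a gap.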

Model failover and fallback – Production models will encounter conditions where the model is not able to provide a valid response or where the inference process takes too long. In each case, the model should have a fallback value or failover model to give a response that meets your SLA. This fallback state can also be used during troubleshooting.
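The tiered behavior described above can be sketched with Python's `concurrent.futures`, assuming the SLA translates into a per-call timeout. The hypothetical `predict_with_fallback` tries the primary model, then an optional failover model, and finally returns a constant fallback value; a response counts as invalid here simply when it is `None`, which is an assumption, since real validity checks are model-specific. It also reports which tier answered, which helps during troubleshooting.

```python
import concurrent.futures as cf
import time
from typing import Any, Callable, Optional, Tuple

Model = Callable[[Any], Any]

_pool = cf.ThreadPoolExecutor(max_workers=4)


def predict_with_fallback(request: Any,
                          primary: Model,
                          failover: Optional[Model],
                          fallback_value: Any,
                          timeout_s: float = 0.1) -> Tuple[Any, str]:
    """Try the primary model, then the failover model, then a constant fallback.

    Returns (response, source) so the serving path can log which tier answered.
    """
    for source, model in (("primary", primary), ("failover", failover)):
        if model is None:
            continue
        future = _pool.submit(model, request)
        try:
            response = future.result(timeout=timeout_s)
        except cf.TimeoutError:
            continue  # too slow for the SLA; the abandoned call finishes in the background
        except Exception:
            continue  # the model raised, so treat it as unable to respond
        if response is not None:  # assumed validity check; real checks are model-specific
            return response, source
    return fallback_value, "fallback"


# Illustrative models for trying out the tiers.
def slow_model(x: float) -> float:
    time.sleep(0.5)  # simulates inference that exceeds the latency budget
    return x


def broken_model(x: float) -> Optional[float]:
    return None  # simulates an invalid response
```

A thread that times out cannot be forcibly stopped in Python, so the pool is sized to absorb a few slow calls; a process-based or out-of-process model server avoids that limitation.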

Model versioning and rollback – Sometimes a model reaches production with a fatal issue. In this case, the production model is rolled back to the prior version until a new version is ready. Proper validation and warm-up processes greatly reduce the likelihood of such issues.
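Rollback is straightforward when every version is kept and the order of promotions is recorded. The sketch below assumes a hypothetical in-memory `ModelRegistry` that stores each registered model under an increasing version number and keeps a promotion history; rolling back pops the current production version and serves the previous one again without retraining anything.

```python
from typing import Any, Callable, Dict, List, Optional

Model = Callable[[Any], Any]


class ModelRegistry:
    """Hypothetical registry: all versions are retained so any prior one can be restored."""

    def __init__(self) -> None:
        self._versions: Dict[int, Model] = {}  # version number -> model artifact
        self._history: List[int] = []          # production versions in promotion order

    def register(self, model: Model) -> int:
        version = len(self._versions) + 1
        self._versions[version] = model
        return version

    def promote(self, version: int) -> None:
        if version not in self._versions:
            raise KeyError(f"unknown version {version}")
        self._history.append(version)

    @property
    def production_version(self) -> Optional[int]:
        return self._history[-1] if self._history else None

    def rollback(self) -> int:
        """Return to the previously promoted version."""
        if len(self._history) < 2:
            raise RuntimeError("no prior version to roll back to")
        self._history.pop()
        return self._history[-1]

    def predict(self, request: Any) -> Any:
        if self.production_version is None:
            raise RuntimeError("no version has been promoted")
        return self._versions[self.production_version](request)
```

Because artifacts are never deleted on promotion, a rollback is only a pointer change, which keeps recovery time short.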

Why Is Production Lifecycle Management Important?

Scaling the use and value of machine learning models in production requires a robust and repeatable process for model lifecycle management. Without lifecycle management, models that reach production and deliver inaccurate predictions, poor performance, or unexpected results can damage your business's reputation for AI trustworthiness. Production model lifecycle management is critical to building a machine learning operations (MLOps) system that allows a business to scale to hundreds or even thousands of models in production. It also allows IT Operations to take responsibility for testing and updating models in production, which frees data science resources to work on additional projects.

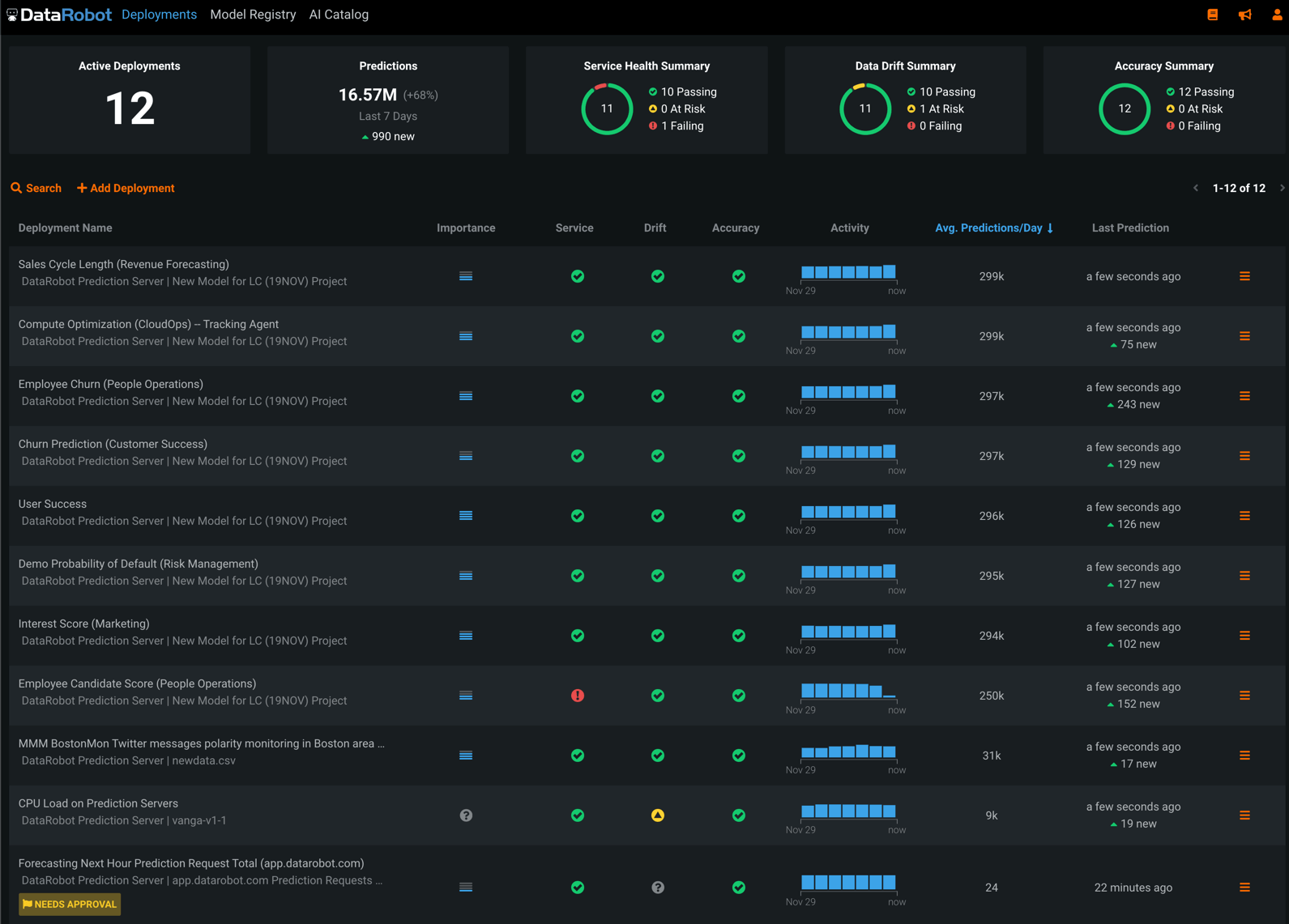

Production Model Lifecycle Management + DataRobot

DataRobot MLOps is available as part of the DataRobot AI Cloud platform. Model lifecycle management is one of the four critical capabilities of DataRobot MLOps. With DataRobot MLOps, models built on any machine learning platform can be deployed, tested, and seamlessly updated in production without interrupting service to downstream applications.