Operate

Learn and Optimize

Collect valuable insights to continuously improve your predictive and generative AI solutions.

Understand if Your AI Solutions are Performing as Intended

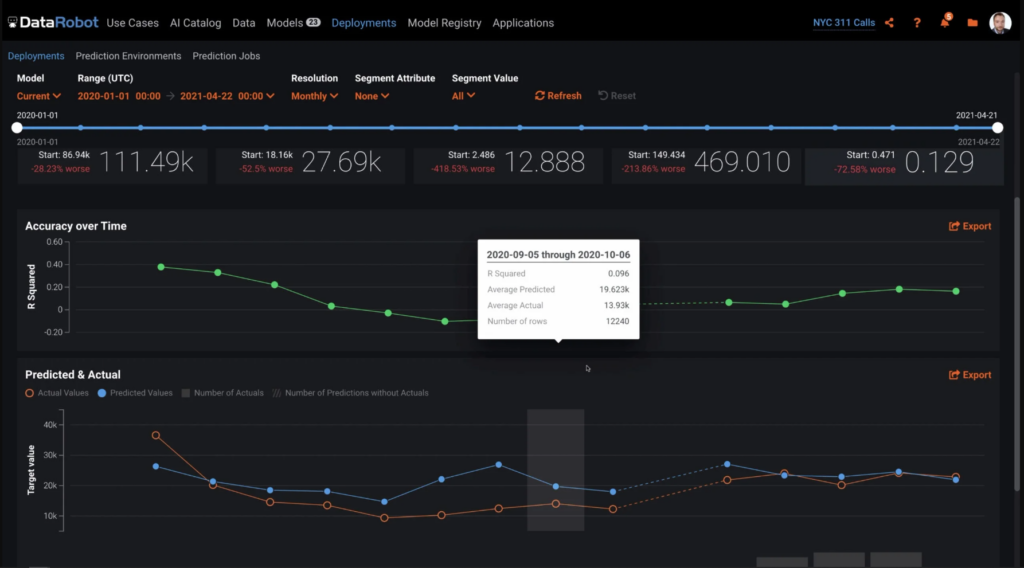

See what is changing in your predictive and generative AI solutions over time, and why and how.

Continuously Optimize Based on Utilization

Collect valuable insights from how end users interact with your generative AI models, and use them to drive continuous improvement.

Compare, Challenge, and Replace Models Instantly

Compare multiple challenger models side by side. Hot swap production models with a single click.

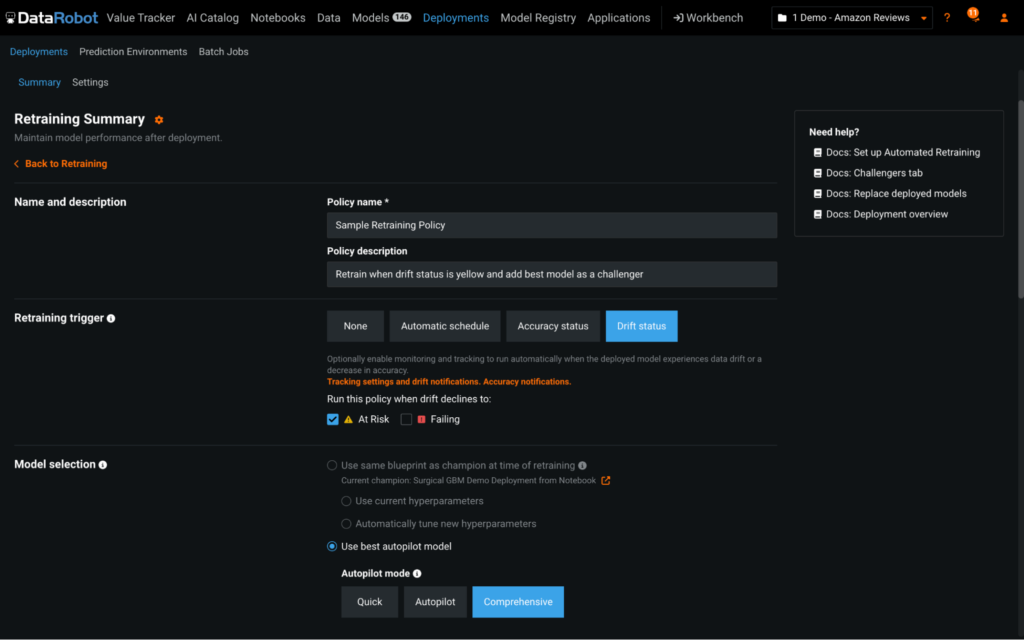

Retrain Proactively or in Response to a Trigger

Configure sophisticated retraining and intervention policies to put continuous improvement on autopilot.

Ensure Models Operate as Intended

By combining generative and predictive AI in one unified experience, you can put ‘guard models’ in place to help prevent hallucinations and ensure generative AI models are used as intended.

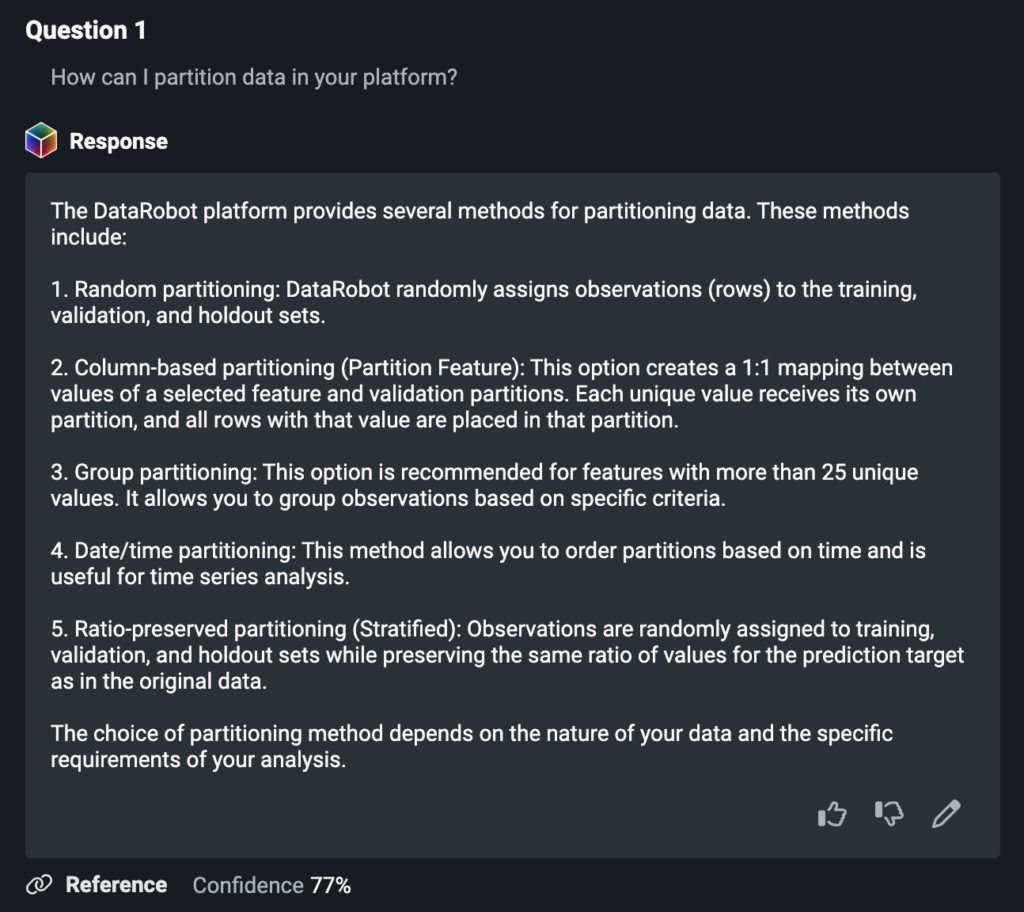

Stop harmful responses before they reach users. Set up models to track or intervene when specific events occur, such as toxic prompts, responses with incomplete information, or personal information leaks. Assess each response against metrics such as correctness and sentiment. You can even use a built-in user feedback loop to drive continuous improvement.
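To make the track-versus-intervene idea concrete, here is a minimal sketch of a guard that screens a generated response for personal information. It is not the DataRobot AI Platform API: the regular expressions stand in for a trained guard model, and `pii_guard`, `GuardResult`, and the `"track"`/`"intervene"` mode names are hypothetical.

```python
import re
from dataclasses import dataclass

# Hypothetical regex checks standing in for a trained guard model.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

WITHHELD = "This response was withheld because it may contain personal information."


@dataclass
class GuardResult:
    text: str         # what is returned to the end user
    flags: list[str]  # names of the checks that fired
    blocked: bool     # True if the guard intervened


def pii_guard(response: str, mode: str = "intervene") -> GuardResult:
    """Flag PII in a response; in 'intervene' mode, replace the response."""
    flags = [name for name, pattern in PII_PATTERNS.items() if pattern.search(response)]
    if flags and mode == "intervene":
        return GuardResult(WITHHELD, flags, True)
    # 'track' mode (or nothing found): pass the response through, keep flags for monitoring.
    return GuardResult(response, flags, False)
```

In "track" mode the flags are only recorded for monitoring; in "intervene" mode the response is replaced before it is returned.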

Continuously Optimize Based on Utilization

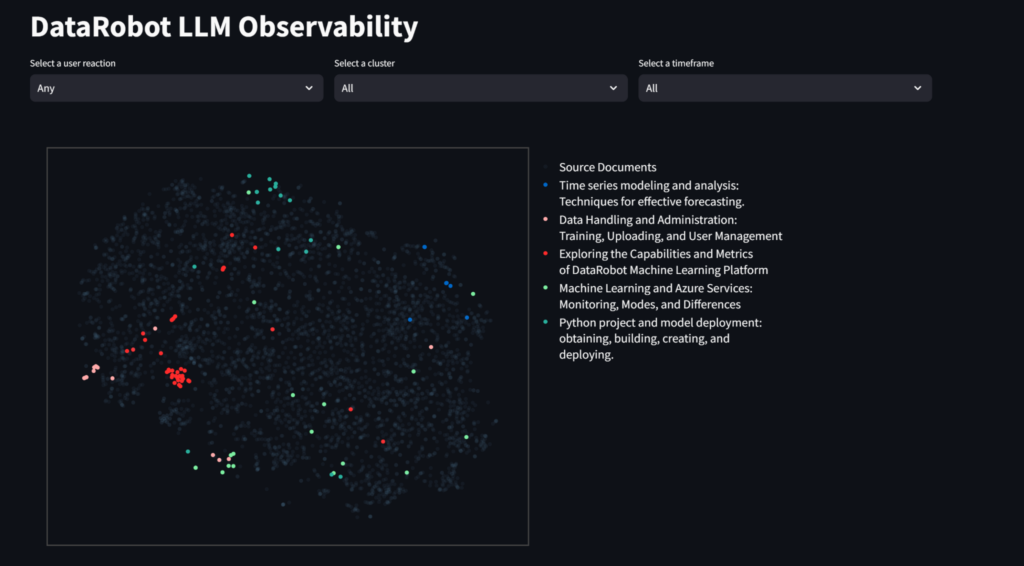

As end users interact with your generative AI models, you can collect valuable insights that drive continuous improvement.

Evaluate how well user questions and generative AI responses match the information in your vector database, using insights that can be displayed in a Streamlit application. Ensure the database is comprehensive, relevant, and current, and evaluate its structure and content. You can also use these insights to identify training opportunities within the organization for topics that come up regularly in user interactions.
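One simple way to approximate "how well questions match the vector database" is to compare each question embedding with its closest stored document embedding and flag questions whose best match is weak. The sketch below assumes embeddings are already computed; `coverage_gaps` and the 0.75 cosine-similarity threshold are hypothetical choices, not DataRobot defaults.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def coverage_gaps(
    questions: list[tuple[str, list[float]]],
    doc_vectors: list[list[float]],
    threshold: float = 0.75,  # hypothetical cutoff for "well covered"
) -> list[tuple[str, float]]:
    """Return (question, best_score) pairs whose closest document scores below threshold."""
    gaps = []
    for text, q_vec in questions:
        best = max((cosine(q_vec, d) for d in doc_vectors), default=0.0)
        if best < threshold:
            gaps.append((text, best))
    return gaps
```

Questions that repeatedly land in the gap list point to content the database is missing, and grouping them by topic is one way to surface recurring training needs.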

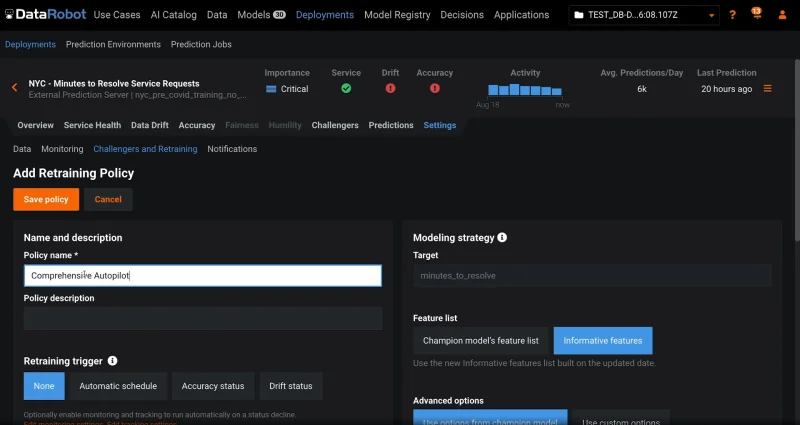

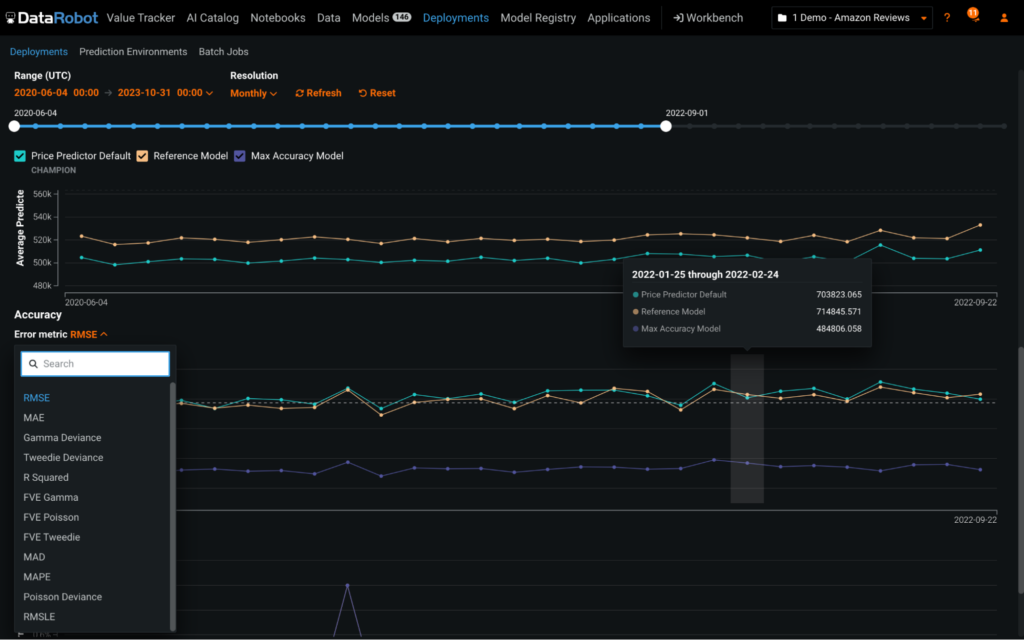

Challenge Your Models

Don’t let your production models get lazy. Analyze performance against your real-world scenarios to identify the best possible model at any given time. Bring your own challenger models or let the DataRobot AI Platform create them for you. Then generate challenger insights for a deep, intuitive analysis of how each challenger performs and how it measures up to the champion. Challenger comparisons are available for time series, multiclass, and external models.
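The core of a champion/challenger comparison is scoring every model on the same production rows. A minimal sketch, assuming a regression target and RMSE as the metric (both are illustrative choices); `compare_to_champion` is a hypothetical helper, not a platform function.

```python
import math


def rmse(actuals: list[float], preds: list[float]) -> float:
    """Root mean squared error over paired values."""
    if len(actuals) != len(preds) or not actuals:
        raise ValueError("actuals and predictions must be non-empty and the same length")
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actuals, preds)) / len(actuals))


def compare_to_champion(
    actuals: list[float],
    champion_preds: list[float],
    challengers: dict[str, list[float]],
) -> tuple[dict[str, float], str]:
    """Score the champion and each challenger on the same rows; lower RMSE is better."""
    scores = {"champion": rmse(actuals, champion_preds)}
    for name, preds in challengers.items():
        scores[name] = rmse(actuals, preds)
    best = min(scores, key=scores.get)
    return scores, best
```

Because every model is scored against identical actuals, the difference in scores reflects the models rather than the data each one happened to see.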

Assess Accuracy at a Glance

Check your model accuracy at a glance using out-of-the-box and custom accuracy metrics. Upload actual outcomes after you make predictions to evaluate production model quality. Whether the true outcome is known seconds, hours, or years later, associate it with the original prediction via a unique prediction ID to unlock robust accuracy tracking for champion and challenger models alike.
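The prediction ID is what lets a late-arriving outcome be scored against the right prediction. A minimal sketch of that join, assuming predictions and actuals are kept as dictionaries keyed by prediction ID; `match_actuals` and `accuracy` are hypothetical helpers, and classification accuracy stands in for whichever metric you track.

```python
from typing import Optional


def match_actuals(
    predictions: dict[str, str], actuals: dict[str, str]
) -> tuple[list[tuple[str, str]], list[str]]:
    """Pair each prediction with its actual by prediction ID.

    Returns (matched (predicted, actual) pairs, IDs still awaiting an outcome).
    Actuals whose ID has no matching prediction are ignored.
    """
    matched = [(predictions[pid], actuals[pid]) for pid in predictions if pid in actuals]
    pending = [pid for pid in predictions if pid not in actuals]
    return matched, pending


def accuracy(matched: list[tuple[str, str]]) -> Optional[float]:
    """Share of matched predictions that were correct (None if nothing matched yet)."""
    if not matched:
        return None
    return sum(pred == actual for pred, actual in matched) / len(matched)
```

Pending IDs simply wait; each new upload of actuals can rerun the join so accuracy updates as ground truth arrives.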

Customizable Retraining Actions Based on Triggering Events

Keep your models well optimized and learning from more recent data with customizable retraining policies for each deployment. You control when retraining happens, either on a schedule or in response to a trigger such as data drift or accuracy degradation. You control the scope of the new model search, from a single blueprint up to a full Autopilot run in Comprehensive mode. You also control the action taken when retraining completes: simply saving the new model package, adding it as a challenger in the deployment, or initiating a model replacement in accordance with your custom-defined replacement governance workflow.
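A retraining policy boils down to a few trigger checks plus a follow-up action. The sketch below is illustrative only: `RetrainingPolicy`, its field names, the default thresholds, and the action strings are hypothetical, and the Population Stability Index (PSI) is used as one common drift measure rather than the platform's own.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (proportions)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total


@dataclass
class RetrainingPolicy:
    max_age: timedelta = timedelta(days=30)  # calendar trigger
    drift_threshold: float = 0.2             # PSI trigger
    accuracy_floor: float = 0.8              # accuracy-degradation trigger
    action: str = "add_as_challenger"        # or "save_package" / "replace_champion"


def retrain_reasons(
    policy: RetrainingPolicy,
    last_trained: datetime,
    now: datetime,
    baseline_bins: list[float],
    recent_bins: list[float],
    current_accuracy: Optional[float],
) -> list[str]:
    """Return which triggers fired; an empty list means no retraining is needed."""
    reasons = []
    if now - last_trained >= policy.max_age:
        reasons.append("schedule")
    if psi(baseline_bins, recent_bins) >= policy.drift_threshold:
        reasons.append("data_drift")
    if current_accuracy is not None and current_accuracy < policy.accuracy_floor:
        reasons.append("accuracy")
    return reasons
```

The caller would start retraining whenever the list is non-empty and then apply `policy.action` to the resulting model.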

