Prediction Explanations

What are Prediction Explanations in Machine Learning?

Traditionally, machine learning models have offered little insight into why or how they arrive at an outcome, which makes it difficult to objectively explain the decisions made and actions taken based on them. Prediction Explanations address this “black box” problem by describing which characteristics, or feature variables, have the greatest impact on a model’s outcomes.

Why are Prediction Explanations Important for Machine Learning?

When the reasons behind a model’s outcomes are as important as the outcomes themselves, Prediction Explanations can uncover the factors that contribute most to those outcomes. For example, banks that use models to decide whether to approve a loan can use Prediction Explanations to see why an application was accepted or rejected. With that insight, they can develop models that comply with regulations, explain model outcomes to stakeholders, and identify high-impact factors to help focus their business strategies.

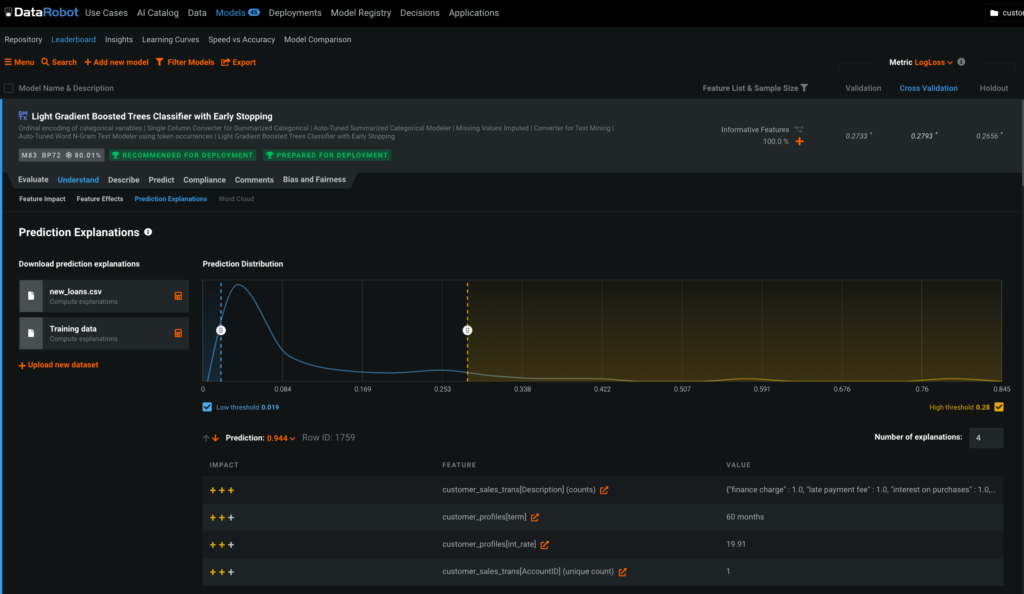

Prediction Explanations + DataRobot

DataRobot’s Prediction Explanations allow you to calculate the impact of a configurable number of features (the “reasons”) for each outcome your model generates. Once the explanations are calculated, you can preview the top explanations or download the full results.

Each explanation consists of a feature from the dataset and its corresponding value, accompanied by a qualitative indicator of the explanation’s strength and of whether it had a positive or negative influence on the final outcome.

From the example above, you could answer the question “Why did the model give one of the patients a 92.9% probability of being readmitted?” The explanations show that the 8 inpatient visits, the 28 medications, and the specific discharge disposition all had a strong positive effect on the (also positive) prediction.
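
To make the shape of an explanation concrete, here is a minimal Python sketch of the general idea: for one row, rank features by their contribution to the prediction and report each as a feature, its value, and a qualitative strength indicator. It is not DataRobot’s algorithm or API. The toy logistic model, its weights, the baseline values, the feature names, and the thresholds behind the “+”/“-” indicators are all assumptions made up for illustration.

```python
import math

# Hypothetical logistic model: weights, baseline (typical) values, and
# intercept are invented for this sketch, not taken from any real model.
WEIGHTS = {"inpatient_visits": 0.30, "num_medications": 0.05, "age": 0.01}
BASELINE = {"inpatient_visits": 1.0, "num_medications": 15.0, "age": 60.0}
INTERCEPT = -1.0  # log-odds of the outcome for a row equal to BASELINE


def contributions(row):
    """Signed log-odds contribution of each feature relative to the baseline."""
    return {f: WEIGHTS[f] * (row[f] - BASELINE[f]) for f in WEIGHTS}


def predict(row):
    """Predicted probability of the positive class for one row."""
    z = INTERCEPT + sum(contributions(row).values())
    return 1.0 / (1.0 + math.exp(-z))


def strength_label(contribution, largest):
    """Turn a signed contribution into an indicator such as '+++' or '-'."""
    if contribution == 0 or largest == 0:
        return "0"
    share = abs(contribution) / largest  # size relative to the top feature
    marks = 3 if share > 0.66 else 2 if share > 0.33 else 1
    return ("+" if contribution > 0 else "-") * marks


def explain(row, max_explanations=3):
    """Return up to max_explanations (feature, value, indicator) tuples."""
    contrib = contributions(row)
    largest = max(abs(c) for c in contrib.values())
    ranked = sorted(contrib, key=lambda f: abs(contrib[f]), reverse=True)
    return [
        (f, row[f], strength_label(contrib[f], largest))
        for f in ranked[:max_explanations]
    ]


# Hypothetical patient row, loosely echoing the readmission example.
patient = {"inpatient_visits": 8, "num_medications": 28, "age": 55}

if __name__ == "__main__":
    print(f"probability: {predict(patient):.3f}")
    for feature, value, indicator in explain(patient):
        print(f"{indicator:>3}  {feature} = {value}")
```

The `max_explanations` parameter plays the role of the configurable number of reasons per outcome. Contributions are measured against a baseline row so that the sign of each indicator reads as “pushed the prediction up” or “pushed it down” relative to a typical case; other attribution methods would define that reference differently.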