Production Model Governance

What Is Production Model Governance?

When machine learning models become critical to business functions, new requirements emerge to ensure quality and to comply with legal and regulatory obligations. The deployment and modification of production models can have far-reaching impacts on customers, employees, partners, and investors, so clear practices must be established to manage these models consistently and to minimize risk.

Production model governance sets the rules and controls for machine learning models running in production, including access control, testing and validation, change and access logs, and traceability of model results. With production model governance in place, organizations can scale machine learning investments and provide legal and compliance reports on the use of machine learning models.

Production model governance includes:

Roles and responsibilities. One of the first steps in production model governance is to establish clear roles, each with defined duties within the production model lifecycle, such as production model manager, production model administrator/approver, production model validator, production data scientist, and production data engineer. Each role description should include qualifications and capabilities and any training or certification requirements. Users may have more than one role.

Access control. To maintain control over production environments, access to production models and environments must be limited. Limitations can be implemented at the individual user level or via role-based access control (RBAC). In either case, only a limited number of people should be able to update production data for model training, deploy production models, create A/B tests, or modify production environments.
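
As an illustration of the RBAC option, the following is a minimal sketch rather than a description of any particular product. The permission names, the role-to-permission mapping, and the helper functions `is_allowed` and `require` are all assumptions made for this example; the role names simply echo the roles listed above.

```python
from enum import Enum, auto


class Permission(Enum):
    UPDATE_TRAINING_DATA = auto()
    DEPLOY_MODEL = auto()
    CREATE_AB_TEST = auto()
    MODIFY_ENVIRONMENT = auto()


# Hypothetical mapping: which role may perform which production action.
ROLE_PERMISSIONS = {
    "production_model_administrator": {
        Permission.DEPLOY_MODEL,
        Permission.CREATE_AB_TEST,
        Permission.MODIFY_ENVIRONMENT,
    },
    "production_data_engineer": {Permission.UPDATE_TRAINING_DATA},
    "production_model_validator": set(),  # reviews only, changes nothing
}


def is_allowed(user_roles, permission):
    """Return True if any of the user's roles grants the permission."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles
    )


def require(user_roles, permission):
    """Raise PermissionError unless one of the roles grants the permission."""
    if not is_allowed(user_roles, permission):
        raise PermissionError(
            f"{permission.name} denied for roles {sorted(user_roles)}"
        )
```

Because a user may hold more than one role, the check passes as soon as any one of the roles grants the requested permission. Unknown roles grant nothing.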

Change / audit logs. For legal and regulatory compliance, secure logging must be provided for access and changes to production systems. The ability to understand when a change was made and by whom is critical for compliance but is also very useful for troubleshooting when something goes wrong. Note that in an automated system, software applications or agents may perform updates based on triggers or schedules. In this case, these automated systems should be treated as users, and the system should record their actions along with other user actions.
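
One way to picture such a log is sketched below, under the assumption of a simple in-memory store. The `AuditEntry` fields and the `AuditLog` class are hypothetical names chosen for this example; a real deployment would write to secure, tamper-resistant storage instead of a Python list.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    timestamp: datetime  # when the change was made (UTC)
    actor: str           # user name, or the identifier of a service or agent
    actor_type: str      # "human" or "automated"
    action: str
    target: str


class AuditLog:
    """Append-only log kept in memory for illustration only."""

    def __init__(self):
        self._entries = []

    def record(self, actor, actor_type, action, target):
        entry = AuditEntry(
            datetime.now(timezone.utc), actor, actor_type, action, target
        )
        self._entries.append(entry)
        return entry

    def entries_for(self, target):
        """Answer 'who changed this, and when?' for one target."""
        return [e for e in self._entries if e.target == target]


log = AuditLog()
log.record("alice", "human", "deploy", "churn-model")
# A scheduled retraining job is logged exactly like a human user.
log.record("retrain-scheduler", "automated", "refresh", "churn-model")
```

Recording the scheduler through the same `record` call keeps automated and human actions in a single timeline, which is what makes the log useful for both compliance and troubleshooting.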

Annotations. Simple recording of actions is necessary but insufficient to understand the motivations of users. For each change to production data, models, or systems, users should provide notes explaining why they took the action, which other users or auditors may find useful. Such records can also be beneficial for troubleshooting.
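
A simple way to enforce annotations is to refuse any change that arrives without a reason. The sketch below assumes this policy; `AnnotatedChange` and `annotate_change` are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnnotatedChange:
    actor: str
    action: str
    reason: str


def annotate_change(actor, action, reason):
    """Reject a change whose rationale is missing or blank."""
    if not reason or not reason.strip():
        raise ValueError("a reason is required for every production change")
    return AnnotatedChange(actor, action, reason.strip())
```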

Production testing and validation. To ensure quality in production, processes should include testing and validation of each new or refreshed model before deployment. These tests and their results should be logged to show that the model was deemed ready for production use. Testing information will be required for model approval.
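
A pre-deployment gate of this kind might look like the sketch below. It assumes that every metric is "higher is better" and that minimum thresholds are agreed in advance; the metric names and threshold values are invented for the example, as are `CheckResult`, `validate_candidate`, and `ready_for_production`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResult:
    metric: str
    value: float
    minimum: float
    passed: bool


def validate_candidate(metrics, minimums):
    """Compare each required metric to its minimum; a missing metric fails."""
    results = []
    for metric, minimum in minimums.items():
        value = metrics.get(metric, float("-inf"))
        results.append(CheckResult(metric, value, minimum, value >= minimum))
    return results


def ready_for_production(results):
    """A candidate is ready only if at least one check ran and all passed."""
    return bool(results) and all(r.passed for r in results)


# Hypothetical thresholds and measured values for a refreshed model.
minimums = {"auc": 0.80, "recall": 0.60}
results = validate_candidate({"auc": 0.84, "recall": 0.55}, minimums)
```

The list of `CheckResult` records, not just the final yes/no decision, is what would be logged and attached to the approval request, since it shows which checks ran and how the model scored on each.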

Model history / version library. Models will change over time as they are updated and replaced in production. Maintenance of complete model history, including model artifacts and changelogs, is critical for legal and regulatory needs.
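
The sketch below shows one possible shape for such a library, assuming that each version is identified by a sequential number and that the artifact is fingerprinted with a SHA-256 hash. `ModelVersion` and `VersionLibrary` are hypothetical names, and the in-memory dictionary stands in for durable storage.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    model_id: str
    version: int
    artifact_sha256: str  # fingerprint of the stored model artifact
    changelog: str


class VersionLibrary:
    """Keeps every version of every model; nothing is overwritten."""

    def __init__(self):
        self._history = {}

    def register(self, model_id, artifact_bytes, changelog):
        versions = self._history.setdefault(model_id, [])
        entry = ModelVersion(
            model_id,
            len(versions) + 1,
            hashlib.sha256(artifact_bytes).hexdigest(),
            changelog,
        )
        versions.append(entry)
        return entry

    def history(self, model_id):
        """Return the full version history, oldest first."""
        return list(self._history.get(model_id, []))
```

Storing a hash alongside the changelog makes it possible to confirm later that an archived artifact is the one that actually ran in production.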

Traceable model results. Each model result must be attributable back to the model and model version that generated that result to meet legal and regulatory compliance obligations. Traceability is especially critical because of the dynamic nature of the production model lifecycle that results in frequent model updates. At the time of a legal or regulatory filing, which could be months after an individual model response, the model in production may not be the same as the model used to create the prediction in question. A record of request data and response values with date and time information satisfies this requirement. Also, a model ID should be provided as part of the model response to make the tracking process easier.
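
To make the record described above concrete, here is a minimal sketch in which every prediction is stored with its request data, response value, timestamp, and model identity, and the response itself carries the model ID. The names `PredictionRecord` and `predict_with_trace`, the list used as a store, and the shape of the response dictionary are all assumptions for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class PredictionRecord:
    model_id: str
    model_version: int
    timestamp: datetime
    request: dict
    response: float


def predict_with_trace(model_id, model_version, predict_fn, request, store):
    """Score a request, persist the trace record, and tag the response."""
    value = predict_fn(request)
    store.append(
        PredictionRecord(
            model_id,
            model_version,
            datetime.now(timezone.utc),
            dict(request),  # copy so later mutation cannot alter the record
            value,
        )
    )
    # The model ID and version travel with the response for easier tracking.
    return {
        "prediction": value,
        "model_id": model_id,
        "model_version": model_version,
    }
```

With records like these, a prediction questioned months later can be matched to the exact model version that produced it, even if that version has since been replaced.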

Why Is Production Model Governance Important?

Scaling the use and value of machine learning models requires a robust and repeatable production process, including clear roles, procedures, and logging to support established controls. This consistent process also dramatically reduces an organization’s operational, legal, and regulatory risk. In addition, logging shows that rules were followed and supports troubleshooting to resolve issues quickly, which increases trust in and value from AI projects.

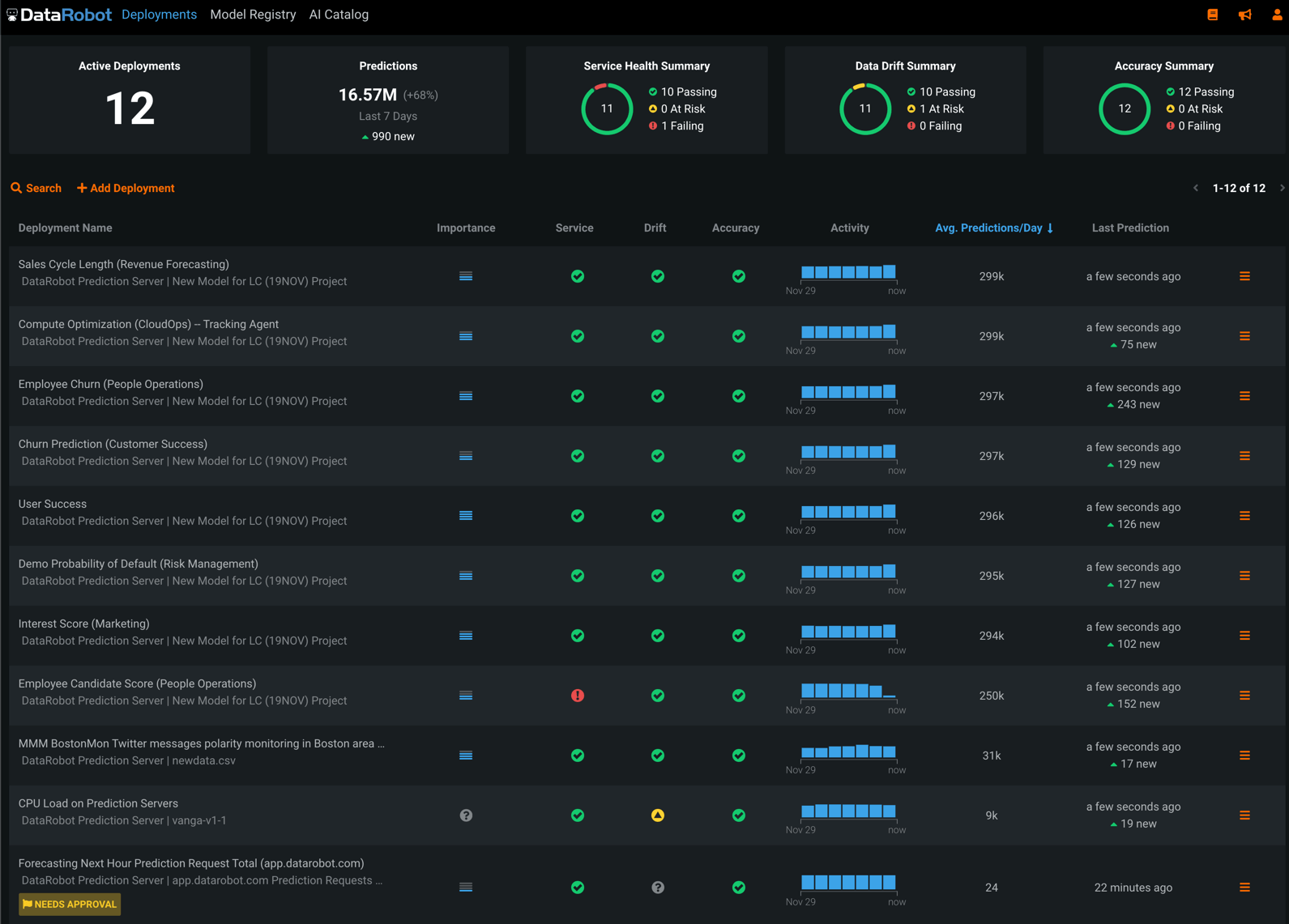

Production Model Governance + DataRobot

DataRobot MLOps is a product that is available as part of the DataRobot AI Cloud platform. Production model governance is one of the four critical capabilities of DataRobot MLOps, alongside simplified model deployment, monitoring for machine learning, and production lifecycle management. With DataRobot MLOps, models built on any ML platform can be deployed, tested, and seamlessly updated in production with full controls in place to manage access and to log information for legal and regulatory compliance.