Trusted AI 101: A Guide to Building Trustworthy and Ethical AI Systems

Introduction to the Dimensions of Trust

Artificial intelligence (AI) as a technology is maturing. No longer the stuff of science fiction, AI has moved from the exclusive domains of theoretical mathematics and advanced hardware into everyday life. Over the last several years of rapidly accelerating development and proliferation, our requirements for mature AI systems have begun to crystallize.

At DataRobot, we define the benchmark of AI maturity as AI you can trust. Trust is not an intrinsic property of an AI system, the way accuracy or even fairness is. Instead, it’s a characteristic of the human-machine relationship formed with an AI system. No AI system comes off the shelf with trust baked in; trust has to be established between an AI user and the system.

The highest bar for AI trust can be summed up in the following question: What would it take for you to trust an AI system with your life?

Fostering trust in AI systems is the great obstacle to realizing transformative AI technologies such as autonomous vehicles or the large-scale integration of machine intelligence into medicine. Neglecting the need for AI trust also downplays the influence of the AI systems already embedded in our everyday financial and industrial processes, and the growing interdependence between our socioeconomic health and algorithmic decision-making. AI is far from the first technology required to meet such a high bar. Industries as diverse as aviation, nuclear power, and biomedicine have paved the path to the responsible use of AI, and what we’ve learned from their approaches to accountability, risk, and benefit forms the foundation of a framework for trusted AI.

Industry leaders and policymakers have begun to converge on shared requirements for trustworthy, accountable AI. Microsoft calls for fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. IBM calls for explainability, fairness, robustness, transparency, and privacy. The Algorithmic Accountability Act of 2019 (H.R. 2231), introduced in the United States House of Representatives, seeks to establish requirements for algorithmic accountability, addressing bias and discrimination, risk-benefit analysis and impact assessment, and security and privacy. The government of Canada has published its own Algorithmic Impact Assessment tool online. These proposals have far more principles in common than they have differences. At DataRobot, we share this vision and have defined our 13 dimensions of AI Trust to pragmatically inform the responsible use of AI.

The challenge now is to translate those guiding principles and aspirations into implementation, and to make that implementation accessible, reproducible, and achievable for all who engage with the design and use of AI systems. This is a tall order, but far from an insurmountable obstacle. This document is not a statement of principles for trustworthy AI; instead, it takes a deep dive into practical concerns and considerations, and into the frameworks and tools that can empower you to address them. We approach these principles through the dimensions of AI Trust, detailed in the sections that follow.

What Do We Mean by “Dimensions of Trust”?

At DataRobot, we sort trust in an AI system into three main categories.

- Trust in the performance of your AI/machine learning model.

- Trust in the operations of your AI system.

- Trust in the ethics of your workflow, both in how the AI system is designed and in how it is used to inform your business process.

Within each of these categories, we identify a set of dimensions that define it more tangibly. Trust is an umbrella concept, so some of these dimensions are at least partially addressed by existing functionality and best practices in AI, such as MLOps. Taken together holistically, these dimensions describe a system that can earn your trust.

It’s worth acknowledging that trust in an AI system varies from user to user. For a consumer-facing application, the trust requirements of the business department that created and owns the AI app are very different from those of the consumer who interacts with it, possibly on their own home devices. Both sets of stakeholders need to know that they can trust the AI system, but the trust signals each one needs, and has access to, will be quite different.

Trust signals are the indicators you can seek out to assess the quality of a given AI system along each of these dimensions. But trust signals are not unique to AI; we all use them to evaluate even human-to-human connections. Think about what kinds of trust signals you intentionally seek out when meeting a new business partner. They vary from person to person, but we all recognize that eye contact is important, especially as a sign that someone is paying attention to you while you speak. For some people, a firm handshake is meaningful; for others, punctuality is vital, and a minute late is a sign of thoughtlessness or disrespect. Reflective language is a powerful way to signal that you are listening. To complicate things, think about how trust signals change when you evaluate a new acquaintance as a potential friend rather than as a business partner. There will be some overlap, but you are more likely to look for shared interests or a similar sense of humor than for common goals and incentives. What would you look for in a new doctor? Besides bedside manner, you might check the diploma on the wall or look up their practice online for ratings, reviews, and patient testimonials.

How does this relate to an AI system? Depending on its use, an AI system might be comparable to any of these human relationships. An AI embedded in your personal banking is one you need to be able to trust like a business advisor. An AI system powering the recommendation algorithm for your streaming television service needs to be trustworthy like a friend who shares your genre interests and knows your taste. A diagnostic algorithm must meet the credentials and criteria you would ask of a medical specialist in the field, and be as open and transparent in responding to your questions, doubts, and concerns.

The trust signals available from an AI system are not eye contact or a diploma on the wall, but they serve the same need. Particular metrics, visualizations, certifications, and tools can enable you to evaluate your system and prove to yourself that it is trustworthy.

In the sections that follow, we will address each dimension of AI trust within the categories of Performance, Operations, and Ethics.

AI Performance

Performance relates to the question, “How well can my model make predictions based on data?” Within Performance, the trust dimensions we address are:

- Data quality – What specific recommendations and assessments can be used to verify the origin and quality of the data used in an AI system? How can identifying gaps or discrepancies in the data help you build a more trustworthy model?

- Accuracy – What are the ways “accuracy” is measured in machine learning? What trust signals (tools and visualizations) are appropriate to define and communicate the performance of your model?

- Robustness & Stability – How do you ensure that your model will behave in consistent and predictable ways when confronted with changes or messiness in your data?

- Speed – How should the speed of a machine learning model inform model selection criteria and AI system design?

AI Operations

Operations relates to the question, “How reliable is the system that my model is deployed on?” Within Operations, which looks beyond the model to the software infrastructure and people around it, we focus on:

- Compliance – In industries with regulatory compliance requirements around AI, how do you best facilitate the verification and validation of your AI system, and bring value to your enterprise as intended?

- Security – AI systems analyze and transmit large amounts of data. What infrastructure and best practices keep your data and model(s) secure when creating and operationalizing AI?

- Humility – Under what conditions might an AI system’s prediction exhibit uncertainty? How should your system respond to uncertainty?

- Governance and Monitoring – Governance in AI is the formal infrastructure of managing human-machine interaction. How do you support principles like disclosure and accountability in the management of your AI system? Who is responsible for monitoring the model over time?

- Business Rules – How do you make an AI system a valued member of your team and seamless part of your business process?

AI Ethics

Ethics relates to the question, “How well does the model align with my values? What is the real impact of my model on the world?” In the context of AI, Ethics benefits from a concrete, systematic approach despite its inherent complexity and subjectivity. Within Ethics, we cover:

- Privacy – Individual privacy is a fundamental right, but it is also complicated by the use and exchange of data. What role does AI play in the management of sensitive data?

- Bias and Fairness – How can AI systems be used to promote fairness and equity in our decision-making? What tools can be used to help define what values you want reflected in your AI system?

- Explainability and Transparency – How can these two linked properties facilitate the creation of a shared understanding between machine and human decision-makers?

- Impact – How can you evaluate the real value that machine learning adds to a use case? Why is it useful or necessary? What impact does it have on your organization, and on the individuals affected by its decisions?

Mature and responsible use of AI requires a holistic understanding of the technical model, the infrastructure that houses it, and the context of its use case. These dimensions of trust are not a checklist, but rather critical guideposts. If an AI project is carefully evaluated along each dimension, we believe it has a far greater chance of achieving its intended impact without unanticipated repercussions. This framework informs the features and design of the DataRobot AI Platform so that DataRobot can best enable all of our users to establish trust with their AI systems. However, we have published this information with recommendations that are independent of the DataRobot platform itself, because it is vital for all AI creators, operators, and consumers to have accessible tools for trusting and understanding AI.

DataRobot – The Trusted AI Platform

DataRobot’s AI Platform supports the end-to-end modeling lifecycle, with capabilities facilitating all steps of the development, deployment, and monitoring of AI and machine learning systems. When it comes to the responsible use of AI, DataRobot strives to answer the question: What does it take to trust an AI system with your life? The Trusted AI team at DataRobot is dedicated to incorporating features and tools into the platform and modeling workflow that make trustworthy, responsible, accountable, and ethical AI accessible and standardized.

Built-in Model Development Guardrails

Target leakage detection – Automatically detect target leakage, in which a feature provides information that should not be accessible to the model at the time of prediction. DataRobot will automatically generate a “Leakage Removed” feature list recommended for modeling.
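One simple way to screen for leakage, sketched below under stated assumptions, is to check whether any single feature predicts the target almost perfectly on rows it was not fitted on; a feature recorded after the outcome (for example, a refund issued to a customer who has already churned) often behaves this way. The function names, the even/odd row split, the 0.98 threshold, and the column names are hypothetical, and this is not DataRobot’s leakage-detection algorithm.

```python
from collections import Counter, defaultdict

def holdout_single_feature_accuracy(rows, feature, target):
    """Fit 'predict the majority target seen for each feature value' on the
    even-indexed rows, then score that rule on the odd-indexed rows."""
    train, test = rows[::2], rows[1::2]
    by_value = defaultdict(Counter)
    for row in train:
        by_value[row[feature]][row[target]] += 1
    # Values never seen in training fall back to the overall majority target,
    # so unique identifiers do not look artificially predictive.
    fallback = Counter(row[target] for row in train).most_common(1)[0][0]
    correct = 0
    for row in test:
        seen = by_value.get(row[feature])
        guess = seen.most_common(1)[0][0] if seen else fallback
        correct += guess == row[target]
    return correct / len(test)

def leakage_removed_features(rows, target, threshold=0.98):
    """Split features into (kept, flagged); a feature is flagged as suspected
    leakage when it alone reaches `threshold` held-out accuracy."""
    features = [name for name in rows[0] if name != target]
    flagged = [name for name in features
               if holdout_single_feature_accuracy(rows, name, target) >= threshold]
    kept = [name for name in features if name not in flagged]
    return kept, flagged

# Hypothetical churn data: "refund_issued" is only known after the outcome.
rows = [
    {"customer_id": i, "plan": ["basic", "pro"][(i // 2) % 2],
     "refund_issued": i % 3 == 0, "churned": i % 3 == 0}
    for i in range(40)
]
kept, flagged = leakage_removed_features(rows, "churned")
print(kept, flagged)  # ['customer_id', 'plan'] ['refund_issued']
```

Scoring on held-out rows rather than the fitting rows is the key design choice: it keeps a high-cardinality column such as an ID from appearing perfectly predictive simply because it memorizes the training rows.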

Robust testing and validation schema – Reliably assess model performance through automatically recommended, but customizable, partitioning schemes: k-fold cross-validation with holdout, out-of-time validation, or backtesting. The same partitions are applied across competing models on the leaderboard for a true apples-to-apples comparison. Additionally, rank leaderboard models by their performance on an external test set.
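The core requirement behind a fair leaderboard comparison is that every model is scored on the same partitions. A minimal sketch of one such scheme, a random holdout plus k-fold cross-validation, follows; the function names, the 20% holdout, and the fixed seed are illustrative assumptions rather than DataRobot’s defaults.

```python
import random

def partition(n_rows, k=5, holdout_fraction=0.2, seed=0):
    """Shuffle row indices once, reserve a holdout set, and split the rest
    into k cross-validation folds. Returns (holdout, folds)."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)  # fixed seed -> same split for every model
    n_holdout = int(n_rows * holdout_fraction)
    holdout, rest = indices[:n_holdout], indices[n_holdout:]
    # Deal the remaining rows round-robin so fold sizes differ by at most one.
    folds = [rest[i::k] for i in range(k)]
    return holdout, folds

def cv_splits(folds):
    """Yield (train, validation) index lists; each fold validates exactly once."""
    for i, validation in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation

holdout, folds = partition(100, k=5)
for train, validation in cv_splits(folds):
    print(len(train), len(validation))  # 64 16, printed five times
```

Out-of-time validation and backtesting would replace the shuffle with a split by date, so that validation rows always come after the rows a model was trained on.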

Explainable AI – Use insights like Feature Impact, Feature Effects, or row-level Prediction Explanations to understand and verify the operation of a model.
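Permutation importance is one widely used way to compute a feature-impact score: shuffle one feature’s values, rescore the model, and measure how much accuracy drops. The sketch below assumes a model is any Python callable that maps a row (a dict) to a label; the toy model, the data, and the function names are hypothetical and are not DataRobot’s implementation of Feature Impact.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction equals the label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, features, seed=0):
    """Accuracy drop when each feature is shuffled across rows. Shuffling
    breaks the feature's link to the target but keeps its distribution."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    impact = {}
    for feature in features:
        column = [row[feature] for row in rows]
        rng.shuffle(column)
        permuted = [{**row, feature: value} for row, value in zip(rows, column)]
        impact[feature] = baseline - accuracy(model, permuted, labels)
    return impact

# Hypothetical model that uses income and ignores the "noise" column.
def model(row):
    return row["income"] > 50

rows = [{"income": 10 * i, "noise": i % 7} for i in range(20)]
labels = [model(row) for row in rows]
impact = permutation_importance(model, rows, labels, ["income", "noise"])
print(impact)  # "noise" scores 0.0 because the model never reads it
```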

Bias and Fairness

Define the appropriate fairness metric – Deciding the right way to define what’s fair for a given use case can be complicated, especially as some metrics are incompatible. DataRobot guides users through a series of questions to determine which of eight supported metrics is the most relevant for a given use case.

Detect and measure bias automatically – With a metric defined, DataRobot will automatically surface the results of a bias test for any model, calculated across the protected classes defined in the data (e.g., race, age, gender).
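As one illustration of what such a bias test computes, the sketch below measures each protected group’s rate of favorable predictions relative to the most-favored group. The function names and example data are hypothetical, and the 0.8 cutoff follows the commonly cited “four-fifths rule” from U.S. employment-selection guidelines; it is an assumption here, not necessarily the threshold or metric DataRobot applies.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Fraction of favorable (truthy) predictions within each protected group."""
    totals, favorable = defaultdict(int), defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        favorable[group] += bool(prediction)
    return {group: favorable[group] / totals[group] for group in totals}

def parity_ratios(predictions, groups, threshold=0.8):
    """Each group's selection rate divided by the highest group's rate.
    Groups whose ratio falls below `threshold` are returned as flagged."""
    rates = selection_rates(predictions, groups)
    best = max(rates.values())
    ratios = {group: rate / best if best else 1.0 for group, rate in rates.items()}
    flagged = sorted(group for group, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged

# Hypothetical loan approvals: group A approved 8/10, group B approved 5/10.
predictions = [True] * 8 + [False] * 2 + [True] * 5 + [False] * 5
groups = ["A"] * 10 + ["B"] * 10
ratios, flagged = parity_ratios(predictions, groups)
print(ratios, flagged)  # B's ratio is about 0.625, so B is flagged
```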

Investigate the source of bias – The Cross-Class Disparity tool enables a user to look deeper into the underlying distributions of features by protected class to identify how the data may be contributing to any bias observed.
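A simplified analogue of this kind of investigation is to compare how often each value of a feature appears within each protected group; a large gap suggests the feature may act as a proxy for group membership. The sketch is hypothetical (the function names, the zip-code data, and the choice of “largest frequency gap” as the summary statistic) and is not how the Cross-Class Disparity tool is implemented.

```python
from collections import Counter, defaultdict

def distribution_by_group(values, groups):
    """Relative frequency of each feature value within each protected group."""
    counts = defaultdict(Counter)
    for value, group in zip(values, groups):
        counts[group][value] += 1
    return {group: {value: n / sum(c.values()) for value, n in c.items()}
            for group, c in counts.items()}

def largest_frequency_gap(values, groups):
    """Return (value, gap): the feature value whose frequency differs most
    between any two groups. Values missing from a group count as 0."""
    dist = distribution_by_group(values, groups)
    all_values = {value for freqs in dist.values() for value in freqs}
    gaps = {}
    for value in all_values:
        freqs = [d.get(value, 0.0) for d in dist.values()]
        gaps[value] = max(freqs) - min(freqs)
    return max(gaps.items(), key=lambda item: item[1])

# Hypothetical zip codes for applicants in two protected groups.
zips = ["10001"] * 6 + ["10002"] * 4 + ["10001"] * 2 + ["10002"] * 3 + ["10003"] * 5
groups = ["A"] * 10 + ["B"] * 10
print(largest_frequency_gap(zips, groups))  # ('10003', 0.5)
```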

Negotiate the bias and accuracy tradeoff – The Bias versus Accuracy chart transforms the leaderboard into a two-dimensional visualization, with the chosen bias metric as the new horizontal axis, so the user can choose which model to deploy while weighing performance and the potential for bias at the same time.
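One way to read such a chart is to keep only the models that no other model beats on both axes at once (the Pareto front); choosing among the remaining models is then a genuine tradeoff. The sketch assumes an accuracy score where higher is better and a bias score where lower is better; the model names and scores are hypothetical.

```python
def pareto_front(models):
    """Names of models not dominated by any other model.
    `models` maps name -> (accuracy, bias); higher accuracy and lower bias are
    better. A model is dominated if another is at least as good on both axes
    and strictly better on at least one."""
    front = []
    for name, (acc, bias) in models.items():
        dominated = any(
            other_acc >= acc and other_bias <= bias
            and (other_acc > acc or other_bias < bias)
            for other, (other_acc, other_bias) in models.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return sorted(front)

# Hypothetical leaderboard: (accuracy, bias score).
leaderboard = {
    "gradient_boosting": (0.91, 0.30),
    "logistic_regression": (0.86, 0.05),
    "random_forest": (0.89, 0.35),
    "decision_tree": (0.84, 0.10),
}
print(pareto_front(leaderboard))  # ['gradient_boosting', 'logistic_regression']
```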

Ensure Compliance

Automatically generate compliance documentation – DataRobot will automatically supply well-formatted compliance documentation, detailing the data and the modeling approach transparently and incorporating UI visualization tools like the Lift Chart or Feature Impact to aid in establishing a clear understanding of model design and operations for regulators. The compliance documentation can also be customized into the appropriate template for a use case.

Approval workflows and user-based permissions – Maintain governance with user-based permissions throughout the development and deployment of AI and machine learning models.

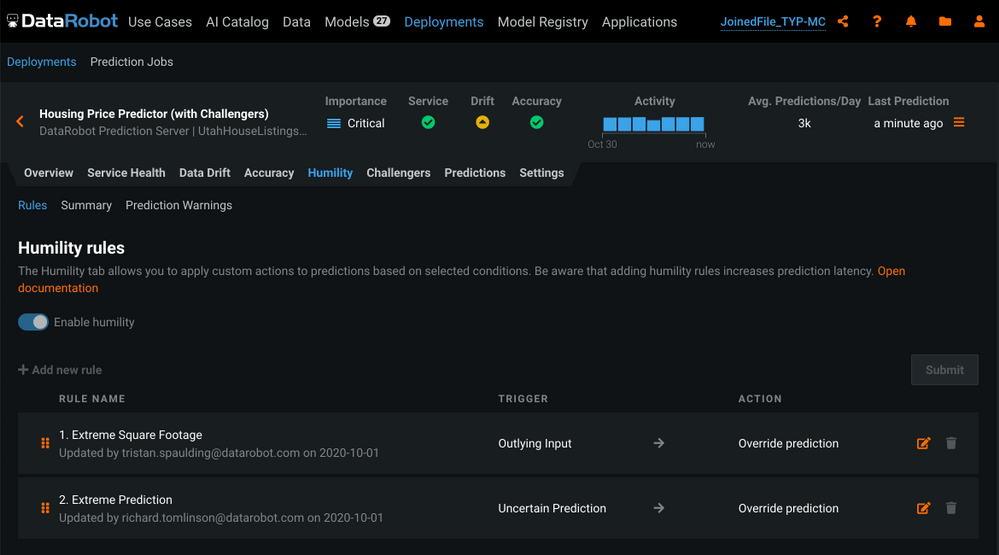

Incorporate Humility into Predictions

Identify conditions in which a model’s prediction may be uncertain – Not all predictions are made with the same level of certainty. In real time, Humble AI can detect the presence of certain characteristics in new scoring data and in the model’s predictions, and flag a prediction as likely to be uncertain.

Create rules to trigger automated actions to protect your business processes – Using Humble AI to identify trigger conditions, the user can establish rules that associate conditions with automated actions, such as overriding a prediction or returning an error.
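The trigger-and-action pattern described above can be sketched as a small rules engine. Everything here is a hypothetical illustration rather than the Humble AI API: the HumilityRule class, the income range check standing in for an outlying-input trigger, the 0.45 to 0.55 band standing in for an uncertain-prediction trigger, and the override value.

```python
from dataclasses import dataclass
from typing import Callable, Optional

class UncertainPredictionError(Exception):
    """Raised when a rule's action is to return an error instead of a prediction."""

@dataclass
class HumilityRule:
    name: str
    trigger: Callable[[dict, float], bool]  # (features, prediction) -> rule fires?
    action: str                             # "override" or "error"
    override_value: Optional[float] = None

    def __post_init__(self):
        if self.action not in ("override", "error"):
            raise ValueError(f"unknown action: {self.action}")

def apply_rules(features, prediction, rules):
    """Evaluate every trigger against the original prediction. An 'error' rule
    raises immediately; 'override' rules replace the returned prediction.
    Returns (final_prediction, names_of_fired_rules)."""
    final, fired = prediction, []
    for rule in rules:
        if rule.trigger(features, prediction):
            fired.append(rule.name)
            if rule.action == "error":
                raise UncertainPredictionError(rule.name)
            final = rule.override_value
    return final, fired

# Hypothetical default-risk model: probabilities near 0.5 are treated as uncertain
# and conservatively overridden to "high risk"; implausible incomes are rejected.
rules = [
    HumilityRule("outlying_income",
                 lambda f, p: not 0 <= f["income"] <= 500_000, "error"),
    HumilityRule("uncertain_probability",
                 lambda f, p: 0.45 <= p <= 0.55, "override", override_value=1.0),
]
print(apply_rules({"income": 60_000}, 0.20, rules))  # (0.2, [])
print(apply_rules({"income": 60_000}, 0.50, rules))  # (1.0, ['uncertain_probability'])
```

Evaluating every trigger against the original prediction, rather than an already-overridden one, keeps the rules independent of their order, except that an error rule stops evaluation as soon as it fires.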