Explainability as a Dimension of Trusted AI

Explainability is a critical element of trustworthiness in AI. Find out about challenges to explaining model behavior and the importance of interpretability and transparency.

Explainability Is Integral to Trusted AI

Explainability is one of the most intuitive and powerful ways to build trust between a user and a model. Whether you are making the case for putting a model into production or justifying an individual recommendation from your AI system, being able to interpret how the model works and how it reaches its decisions is a major asset in your final evaluation.

What Does It Mean for a Model to Be “Explainable”?

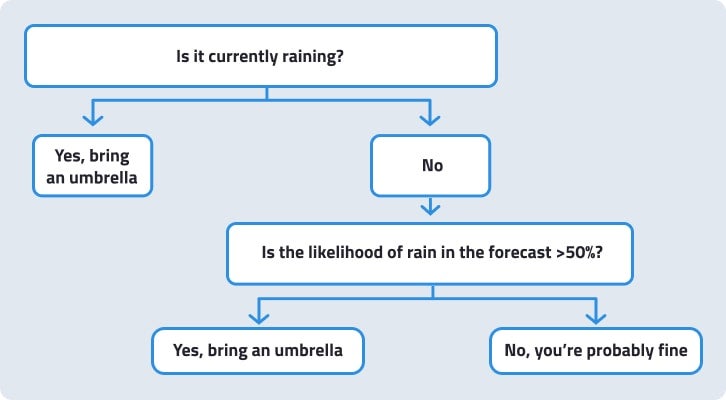

A simple linear regression model, with a coefficient assigned to each variable, is straightforward to interpret. Similarly, a simple decision tree, like the one visualized in Figure 1, can be understood intuitively just by inspecting it. For models like these, full transparency (that is, access to every parameter that makes up the model) is tantamount to explainability.
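As a minimal sketch of why a linear model is transparent and explainable at the same time, the Python snippet below fits an ordinary least-squares model with NumPy to synthetic data. The feature names `x1`, `x2`, `x3` and the "true" coefficients are hypothetical, chosen only for illustration. Because the toy data is noise-free, the fitted coefficients recover the generating values, and each one can be read directly as the change in prediction per unit change in that feature.

```python
import numpy as np

# Hypothetical features and "true" effects, used only to generate toy data.
feature_names = ["x1", "x2", "x3"]
true_coefs = np.array([3.0, 10.0, -0.5])
true_intercept = 50.0

rng = np.random.default_rng(seed=0)
X = rng.uniform(0, 100, size=(200, 3))
y = X @ true_coefs + true_intercept  # noise-free target for a clean demo

# Prepend a column of ones so least squares also estimates the intercept.
X_design = np.column_stack([np.ones(len(X)), X])
params, *_ = np.linalg.lstsq(X_design, y, rcond=None)
intercept, coefs = params[0], params[1:]

# The whole model is these four numbers, and each one is directly interpretable.
print(f"intercept: {intercept:.2f}")
for name, coef in zip(feature_names, coefs):
    print(f"{name}: each unit adds {coef:+.2f} to the prediction")
```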

The simple decision tree example answers the question: Should I bring an umbrella when I go out today? You can think of it as a model that evaluates one binary variable (“Is it currently raining?”) and one numeric variable (the forecast probability of rain) to make its recommendation. This is a snapshot of the basic machinery on which more advanced tree-based algorithms are built. At this baseline level of complexity, a transparent model is fully explainable to a human reader, with no additional tools or insight needed.
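The umbrella tree is small enough to write out by hand. The Python sketch below is a hypothetical rendering of it: the figure's exact split order and threshold are not reproduced in the text, so checking "Is it raining?" first and using a 0.5 cut-off on the forecast probability are assumptions. The function returns its decision path alongside the recommendation, which illustrates that for a model this small, the structure itself is the explanation.

```python
def should_bring_umbrella(is_raining: bool, rain_probability: float) -> tuple[bool, list[str]]:
    """Hypothetical two-level decision tree; split order and 0.5 threshold are assumed."""
    path = []
    if is_raining:
        path.append("it is currently raining")
        return True, path
    path.append("it is not currently raining")
    if rain_probability >= 0.5:  # assumed threshold, not taken from Figure 1
        path.append(f"forecast probability {rain_probability:.0%} >= 50%")
        return True, path
    path.append(f"forecast probability {rain_probability:.0%} < 50%")
    return False, path


# Each recommendation comes with the exact rules that produced it.
for raining, prob in [(True, 0.1), (False, 0.7), (False, 0.2)]:
    decision, reasons = should_bring_umbrella(raining, prob)
    print("bring umbrella" if decision else "leave it", "because", "; ".join(reasons))
```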

But most machine learning models are built on more complex algorithms. For example, a Random Forest model is actually an ensemble of dozens of decision trees. With that increase in complexity, a model’s transparency becomes disconnected from its explainability. Knowing every weight in a neural network will not, by itself, allow even an advanced data scientist to interpret the network. This does not mean that complex models cannot be explained or their decisions understood. Instead, explaining them requires a different toolset.
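A quick back-of-the-envelope count suggests how fast transparency outgrows human reading. The Python sketch below assumes fully grown binary trees (a tree of depth d has at most 2**d - 1 split nodes) and a fully connected neural network with one weight per connection plus one bias per non-input unit. The forest and network sizes are hypothetical examples, not figures from any particular model.

```python
def max_split_nodes(n_trees: int, depth: int) -> int:
    """Upper bound on split rules in a forest of full binary trees of the given depth."""
    return n_trees * (2**depth - 1)


def mlp_param_count(layer_sizes: list[int]) -> int:
    """Weights plus biases in a fully connected network given as [inputs, hidden..., outputs]."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical sizes: a forest of 50 trees of depth 10, and a small 20-64-64-1 network.
print(max_split_nodes(n_trees=50, depth=10))  # 51150
print(mlp_param_count([20, 64, 64, 1]))       # 5569
```

Every one of those numbers can be inspected, yet none of them on its own says why the model made a particular prediction.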

How Can I Make a Model Explainable?

Several kinds of insight can support model explainability:

- Variable importance techniques work at the level of the model’s overall decisions and score the aggregated predictive power of each feature.

- Partial dependence plots illustrate how the model’s average prediction changes as a given feature varies across its range of possible values.

- Prediction explanations identify the most impactful individual factors behind a single prediction. Two common methods for calculating them, XEMP and SHAP (SHapley Additive exPlanations), are supported in DataRobot.
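To make these three kinds of insight concrete, the Python sketch below applies them to a hypothetical toy model using only NumPy. It uses permutation importance as one example of a variable importance technique, computes partial dependence by forcing one feature to each grid value and averaging the predictions, and computes exact Shapley values for one row by brute-force enumeration of feature subsets, filling in "absent" features from a single baseline row. This is an illustrative sketch, not DataRobot's implementation or the SHAP library, and XEMP is not shown; exhaustive enumeration is only feasible for a handful of features.

```python
import itertools
import math

import numpy as np

rng = np.random.default_rng(seed=0)
X = rng.normal(size=(500, 3))


def model(X: np.ndarray) -> np.ndarray:
    """Hypothetical black-box model: linear in x0, nonlinear in x1, ignores x2."""
    return 3.0 * X[:, 0] + np.sin(2.0 * X[:, 1])


y = model(X) + rng.normal(scale=0.1, size=len(X))  # toy target with a little noise


def permutation_importance(X, y, n_repeats=5, seed=0):
    """Variable importance: mean rise in MSE when one feature's column is shuffled."""
    perm_rng = np.random.default_rng(seed)
    base_mse = np.mean((model(X) - y) ** 2)
    scores = []
    for j in range(X.shape[1]):
        rises = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            X_perm[:, j] = perm_rng.permutation(X_perm[:, j])
            rises.append(np.mean((model(X_perm) - y) ** 2) - base_mse)
        scores.append(float(np.mean(rises)))
    return scores


def partial_dependence(X, feature, grid):
    """Average prediction over the data with `feature` forced to each grid value."""
    averages = []
    for value in grid:
        X_fixed = X.copy()
        X_fixed[:, feature] = value
        averages.append(float(model(X_fixed).mean()))
    return averages


def shapley_values(x, baseline):
    """Exact Shapley values for one row; 'absent' features take the baseline's values."""
    n = len(x)
    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in itertools.combinations(others, size):
                z = baseline.copy()
                z[list(subset)] = x[list(subset)]
                without_i = model(z[None, :])[0]
                z[i] = x[i]
                with_i = model(z[None, :])[0]
                phi[i] += weight * (with_i - without_i)
    return phi


importance = permutation_importance(X, y)
grid = np.linspace(-2.0, 2.0, 5)
pd_x1 = partial_dependence(X, feature=1, grid=grid)
x, baseline = X[0], X.mean(axis=0)
phi = shapley_values(x, baseline)

print("permutation importance:", np.round(importance, 3))
print("partial dependence of x1:", np.round(pd_x1, 3))
print("Shapley values for row 0:", np.round(phi, 3))
```

With this toy model, the importance scores should rank x0 above x1 and give x2 exactly zero, the partial dependence values follow the sine shape in x1, and the Shapley values sum to the difference between the model's prediction for the row and its prediction for the baseline (the efficiency property).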

Explainability Is Just a Piece of the Puzzle

Explainability is just one of the dimensions of trusted AI that support ethics. The full list includes the following: